Elixir is a strongly typed, dynamic language: it checks types at run time, which enables some of its most powerful features, such as pattern matching and macros. The “strong” part means that type conversions must be explicit. In Elixir, 1 + "1" raises an ArithmeticError. JavaScript, by contrast, tries to always do “something” with your code, happily converts between types, and evaluates the same expression to "11".

These properties make Elixir a flexible and productive language, but they also leave room for bugs such as passing a value of the wrong type to a function. That kind of bug only surfaces at run time, when it crashes the process. The likelihood of such bugs grows with the size of a project’s codebase.

Many of these bugs can be caught with static analysis, which checks as many assumptions as possible before the code is executed. Dialyzer is a great tool for this: it analyzes your code together with Elixir and Erlang’s built-in typespecs to catch type errors and unreachable code. A welcome side effect of using Dialyzer is that it encourages extensive use of typespecs (@spec, @type), which improves readability and maintainability.

Adding Dialyzer to the CI pipeline of an Elixir or Erlang codebase increases confidence in code changes and helps prevent regressions. At Massdriver, we host our code on GitHub and make extensive use of GitHub Actions for CI. In this post, I’ll show you how to set up Dialyzer in a GitHub Actions workflow while avoiding some common mistakes.

Time is Money

The example GitHub Actions workflow in the dialyxir README is a good starting point:

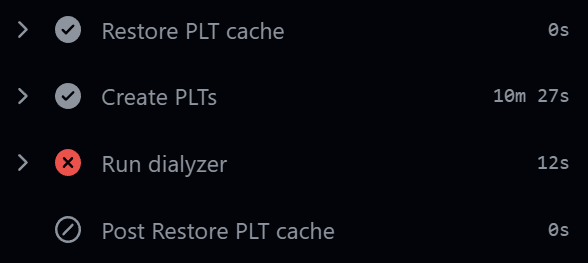

However, depending on the size of your codebase and dependencies, the “Create PLTs” step can take a long time. This step builds the Persistent Lookup Tables (PLTs), which store the results of Dialyzer’s static analysis. For the main Massdriver application, a complete rebuild takes over 10 minutes! Worse, if the next step, “Run dialyzer”, fails, the cache is not saved, so the next run has to rebuild the PLTs from scratch. Again!

Fun with Caches

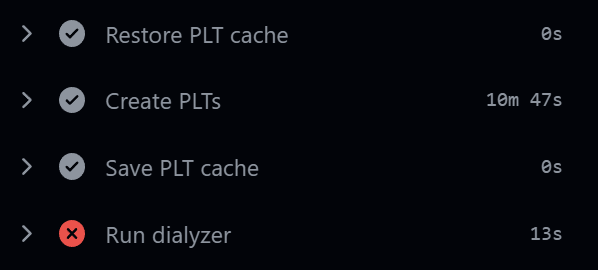

By default, the GitHub Cache action only saves the cache if all steps in the job succeed. Since version 3.2, however, actions/cache also provides separate restore and save actions. We can replace the all-in-one action with actions/cache/restore@v3 to restore the PLTs, build them only if there was no cache hit, and finally use actions/cache/save@v3 to save the PLTs even if the Run dialyzer step fails. This way, when I push a commit that fails mix dialyzer (which happens to me all the time), CI for the follow-up fix completes much faster.
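Here is a minimal sketch of the split restore/save pattern. It is not the exact Massdriver workflow. The Elixir/OTP versions are placeholders. The priv/plts directory assumes dialyxir has been configured in mix.exs to write its PLTs there. The cache key layout and step ids (plt_cache, create_plts) are illustrative choices.

```yaml
name: Dialyzer

on: [push, pull_request]

jobs:
  dialyzer:
    runs-on: ubuntu-22.04
    steps:
      - uses: actions/checkout@v3

      # Placeholder versions; match these to your project
      - name: Set up Elixir
        id: beam
        uses: erlef/setup-beam@v1
        with:
          elixir-version: "1.15"
          otp-version: "26"

      - name: Install dependencies
        run: mix deps.get

      # Restore only: this step never saves, so the job's final status
      # has no effect on whether the PLTs get cached
      - name: Restore PLT cache
        id: plt_cache
        uses: actions/cache/restore@v3
        with:
          # Assumed key layout: a new mix.lock produces a new key,
          # while restore-keys falls back to the most recent older PLT
          key: plt-${{ runner.os }}-${{ steps.beam.outputs.otp-version }}-${{ steps.beam.outputs.elixir-version }}-${{ hashFiles('**/mix.lock') }}
          restore-keys: |
            plt-${{ runner.os }}-${{ steps.beam.outputs.otp-version }}-${{ steps.beam.outputs.elixir-version }}-
          path: priv/plts

      # Build (or update a partially restored) PLT when the exact key missed
      - name: Create PLTs
        id: create_plts
        if: steps.plt_cache.outputs.cache-hit != 'true'
        run: mix dialyzer --plt

      - name: Run dialyzer
        run: mix dialyzer

      # always() lets this step run even if "Run dialyzer" failed.
      # The outcome check skips the save on an exact cache hit
      # (Create PLTs was skipped) or when building the PLTs failed.
      - name: Save PLT cache
        if: always() && steps.create_plts.outcome == 'success'
        uses: actions/cache/save@v3
        with:
          key: ${{ steps.plt_cache.outputs.cache-primary-key }}
          path: priv/plts
```

Moving the save step directly after Create PLTs, before Run dialyzer, would have the same effect without needing always(). Placing it last matches the order described above.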

We haven’t yet fixed our Dialyzer bug, but we saved the PLTs to the cache even though Run dialyzer failed!

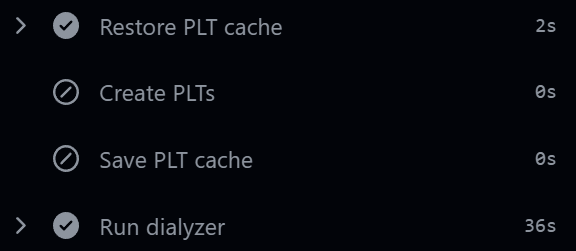

Now let’s push a fix and see how long it takes to run Dialyzer again.

A fast CI pipeline shortens feedback loops and lets developers move faster. Every minute saved on CI is saved again each time a developer pushes a commit. Build time optimization is often overlooked, but it can have an outsized impact on developer productivity.